If You’re Not Using Git LFS, You’re Already Behind!

The popularity of the Git version control system among developers has grown consistently over the last few years, with many Subversion users making the switch to Git’s ‘file system snapshot’ approach, which differs from the ‘file change logging’ approach of classic VCS software.

Git’s Little Problem – Large Files

However, Git was designed to handle source code, not to store your binaries. Development teams that used it for large binary files experienced a massive drop in performance. Strictly speaking, binaries don’t really belong in a version control system at all; their presence alongside the code, however, is informative and intuitive to developers. In GitHub’s own words, the workflows enabled by Git “haven’t always been practical for versioning large files”. In other words, working with large binary files such as graphics, audio, video and even large data sets has been slowwww!

And Then Came Git LFS

In April 2015, GitHub announced Git Large File Storage (LFS), an extension that nicely solves the performance issues that arise when large binary files are part of your project. Git LFS is a simple download and install and, once configured (which is also very straightforward), it is transparent: you carry on working with the same Git workflow as before and just enjoy better performance.

It’s flexible too. You can optimize Git LFS to suit your needs; media files can be integrated into your VCS file structure while you define whatever storage location makes sense for you – on local, remote or cloud servers.

The Git LFS Secret Sauce – Pointers Instead of Downloads

Git LFS achieves the benefits described above by committing small text pointers in place of the large files themselves, avoiding the need to pull every version of every large file into the developer’s local working environment. Each pointer identifies its file by a SHA-256 checksum of the file’s contents, so it is easy to tell whether the contents have changed, something that can conserve storage space (it doesn’t take too many libraries of media or graphics files to make a dent in your available space).
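To make the pointer idea concrete, here is a minimal Python sketch of what a Git LFS pointer looks like according to the published pointer format: a version line, a SHA-256 object ID, and the size in bytes. The helper names `build_pointer` and `parse_pointer` are made up for illustration; the real Git LFS client generates pointers for you, so treat this as an explanation rather than something you would run in a workflow.

```python
import hashlib

# Version line defined by the Git LFS pointer format.
LFS_SPEC_URL = "https://git-lfs.github.com/spec/v1"


def build_pointer(content: bytes) -> str:
    """Return the text of an LFS-style pointer for the given file content.

    Hypothetical helper for illustration only.
    """
    oid = hashlib.sha256(content).hexdigest()
    return (
        f"version {LFS_SPEC_URL}\n"
        f"oid sha256:{oid}\n"
        f"size {len(content)}\n"
    )


def parse_pointer(text: str) -> dict:
    """Split a pointer into its key/value fields (hypothetical helper)."""
    return dict(line.split(" ", 1) for line in text.splitlines() if line)


if __name__ == "__main__":
    original = build_pointer(b"pretend this is a large video file")
    edited = build_pointer(b"pretend this is a large video file, edited")

    print(original)
    # Different content yields a different oid, so a change is easy to detect.
    print(parse_pointer(original)["oid"] != parse_pointer(edited)["oid"])
```

However large the asset is, the pointer stays a few lines long, and that pointer is what the Git repository actually tracks.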

Git LFS PRO TIPS – Getting the most out of Artifactory

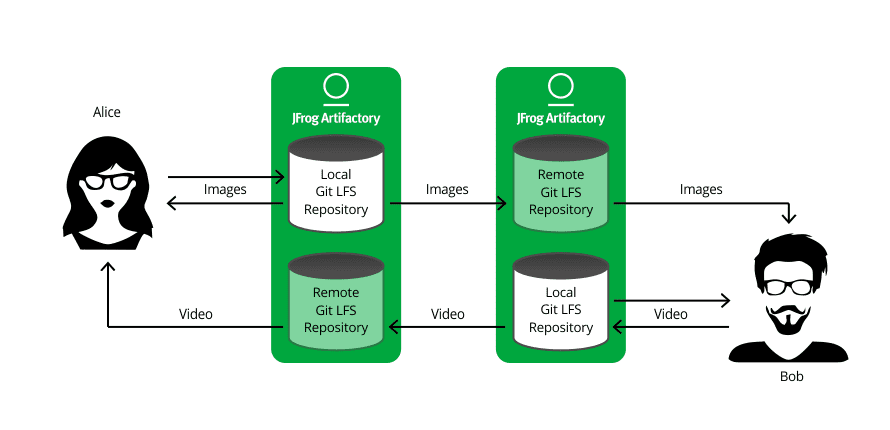

Artifactory has supported local Git LFS repositories for a while now, giving you a place to manage your binary media assets within your local network while keeping you independent of the network or third-party services. With the release of version 4.7, Artifactory also supports remote and virtual Git LFS repositories. Remote repositories give you a way to share binary media assets across your organization by proxying a Git LFS repository in another Artifactory instance. Say Alice and Bob are working on the same project, but are located in different countries. Alice develops image files, Bob develops video files. They both need access to each other’s files. Each of them uploads their own files to a local repository on their own Artifactory instance, and creates a remote repository to proxy the other’s local one. Like this:

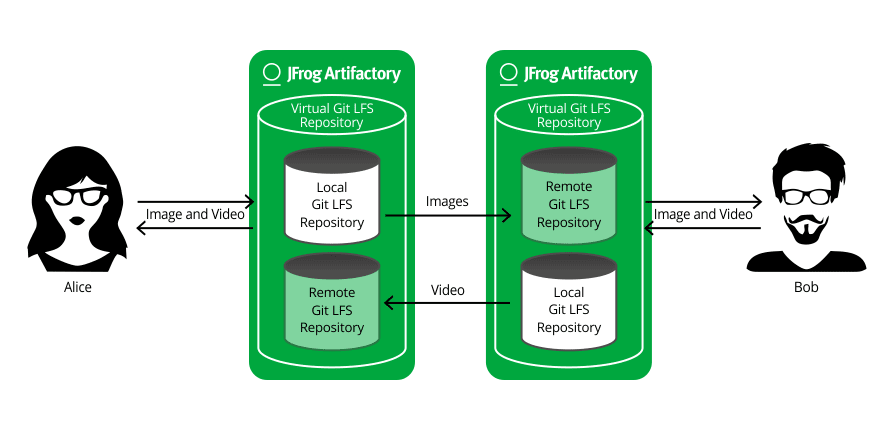

Virtual repositories make your work even simpler. By encapsulating your local and remote Git LFS repositories, you only need to configure your Git LFS client with a single URL, that of your virtual Git LFS repository in Artifactory, and work with that. The details of the underlying repositories are hidden. In our example, once Alice and Bob have each defined a virtual repository, each of them points their Git LFS client at their own virtual repository, which aggregates their own local repository and the remote repository proxying the other’s.
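As a rough sketch of the "single URL" setup, the Python snippet below renders the contents of an `.lfsconfig` file for each developer. It assumes Artifactory exposes Git LFS repositories under an `api/lfs/<repository key>` path; the host names and the repository key `lfs-virtual` are hypothetical, so check the URL against your own Artifactory instance before using it.

```python
def artifactory_lfs_url(base_url: str, repo_key: str) -> str:
    """Build the Git LFS endpoint for a repository.

    Assumes the endpoint layout <base>/api/lfs/<repo key>.
    """
    return f"{base_url.rstrip('/')}/api/lfs/{repo_key}"


def render_lfsconfig(base_url: str, repo_key: str) -> str:
    """Return .lfsconfig text that points the Git LFS client at one URL."""
    return f"[lfs]\n\turl = {artifactory_lfs_url(base_url, repo_key)}\n"


if __name__ == "__main__":
    # Hypothetical instances: each developer talks only to their own
    # virtual repository, which hides the local and remote ones behind it.
    instances = {
        "Alice": "https://alice.example.com/artifactory",
        "Bob": "https://bob.example.com/artifactory",
    }
    for name, base_url in instances.items():
        print(f"# .lfsconfig for {name}")
        print(render_lfsconfig(base_url, "lfs-virtual"))
```

Committing the rendered `.lfsconfig` at the root of the Git repository lets everyone who clones it pick up the same endpoint without configuring it by hand.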

Now, if you take this scenario and expand it to 10 (or 100) developers working on the same project across 4 global sites, you start to understand the value and efficiency of working with remote and virtual Git LFS repositories in Artifactory. And that’s before we even go into the fine-grained access control or checksum-based storage Artifactory gives you.

In conclusion, if you are a Git user and you haven’t yet downloaded Git LFS, get back in front and download Git LFS now. From there, you’re just 5 minutes away from setting up Git LFS to work with Artifactory.