Push the Limits of Virtual Repositories

Our recent Developer and DevOps Trends 2015 survey showed that anyone using Docker also uses additional technologies. I’ll let you in on a little secret. That’s true in general, not just in relation to Docker. The vast majority of developers work with several different technologies at once. So most (if not all) of you are exposed to different packaging technologies and understand that they need to resolve dependencies and deploy artifacts. Each technology implements this in a different way. For example, in Maven and Gradle, you can configure different endpoints for deployment and resolution. Conversely, some of the newer tools, such as npm, have a built-in assumption that you are working with their central repository, so they assume you resolve and deploy using the same URL.

Now, the typical scenario in Artifactory is that you resolve dependencies from local and remote repositories (including the corresponding “official public” repository for each packaging technology), and upload your own artifacts to a local repository. But we asked ourselves, how can we provide a better user experience? Why do we have to expose this to all developers and make them configure their build tools with different repositories for resolution and deployment? Well, those days are over. Artifactory now hides all these details by allowing you to deploy artifacts to a virtual repository.

Remember virtual repositories?

For any package type, Artifactory lets you define virtual repositories which aggregate several local and remote repositories of the same type. For example, suppose you have the following Docker repositories defined in your system:

- docker-local: A local Docker repository where your development team deploys the Docker images they develop.
- docker-hub-remote: A remote repository that proxies Docker Hub.
- docker-virtual: A virtual Docker repository that aggregates docker-local and docker-hub-remote.

Anyone who is familiar with Artifactory knows that you can resolve Docker images from any of the local or remote repositories through the virtual repository. That’s what virtual repositories do. They hide the details of where you are resolving dependencies from, giving the administrator the freedom to add or remove underlying repositories as needed. So far, nothing new.
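To make the aggregation idea concrete, here is a minimal toy model in Python. It is only a sketch: the `Repo` and `VirtualRepo` classes, the image tags, and the "search in order" rule are illustrative assumptions, not Artifactory's actual implementation or API.

```python
from dataclasses import dataclass, field


@dataclass
class Repo:
    """Toy stand-in for a local or remote repository (hypothetical class)."""
    name: str
    images: dict = field(default_factory=dict)  # image tag -> payload


@dataclass
class VirtualRepo:
    """Toy virtual repository that aggregates other repositories."""
    name: str
    repositories: list  # aggregated repos, searched in list order (assumption)

    def resolve(self, tag):
        # The caller only talks to the virtual repo and never needs to
        # know which underlying repository actually holds the image.
        for repo in self.repositories:
            if tag in repo.images:
                return repo.name, repo.images[tag]
        raise KeyError(f"{tag} not found in {self.name}")


# Example data is made up for illustration.
docker_local = Repo("docker-local", {"myapp:1.0": "in-house image"})
docker_hub_remote = Repo("docker-hub-remote", {"ubuntu:14.04": "public image"})
docker_virtual = VirtualRepo("docker-virtual", [docker_local, docker_hub_remote])

print(docker_virtual.resolve("myapp:1.0"))
print(docker_virtual.resolve("ubuntu:14.04"))
```

In this model the administrator can add or remove entries in `repositories` without the caller of `resolve` changing anything, which is the point of the virtual layer.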

So what’s new?

Well, now, you can also deploy packages to a virtual repository. This means that developers only need to know about one URL for resolution and deployment of artifacts, and consequently they only need that one URL to configure their build tools. That’s one level of convenience. And when you consider that your virtual Docker repository is now a fully-fledged Docker registry, the next level of convenience comes when you have to configure a reverse proxy for Docker. Here too, needing only a single URL makes life easier for your local DevOps person.

But there’s more. Behind the scenes, the Artifactory administrator can also change the target repository, modify include and exclude patterns to enforce security policies, or update permissions on the underlying repositories to implement access restrictions. And all this is hidden from the developer who just needs to push and pull images without worrying about all these details.

How does it work?

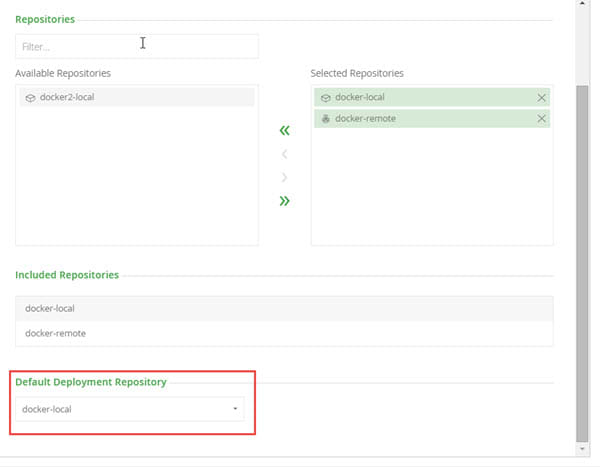

In your virtual repository configuration, just specify one of the local repositories that the virtual repository aggregates as the deployment target. That’s it.
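If you prefer to think of this setting as data rather than a form field, the sketch below builds a configuration payload for the example repositories in Python. The field names (`rclass`, `packageType`, `repositories`, `defaultDeploymentRepo`) follow my understanding of Artifactory's repository configuration JSON, and the helper `virtual_repo_config` is hypothetical, so treat all of them as assumptions and check the documentation for your version before relying on them.

```python
import json


def virtual_repo_config(key, repositories, deployment_target):
    """Build a virtual repository config dict (hypothetical helper)."""
    # The deployment target has to be one of the aggregated repositories.
    # This sketch cannot verify that it is a *local* repository.
    if deployment_target not in repositories:
        raise ValueError(
            f"{deployment_target} is not aggregated by {key}"
        )
    return {
        "key": key,
        "rclass": "virtual",           # assumed field name
        "packageType": "docker",       # assumed field name
        "repositories": list(repositories),
        "defaultDeploymentRepo": deployment_target,  # assumed field name
    }


config = virtual_repo_config(
    "docker-virtual",
    ["docker-local", "docker-hub-remote"],
    "docker-local",
)
print(json.dumps(config, indent=2))
```

The only new piece compared to a resolve-only virtual repository is the single deployment target entry; everything else stays as it was.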

Now your virtual repository manages not only where dependencies are resolved from, but also where artifacts are deployed to. And all your development team needs to know about is this one virtual repository. All the other details are hidden.